BSides København 2024 | Secure by Design: Enhance Application Security, Reduce Developer Frustrations, and Save on Costs

Day of talk: November 09, 2024

Many development teams do not actively think about security during their development process, which can lead developers and system architects to unintentionally design vulnerable applications. My talk presented a practical approach to implementing "secure by design" in most development processes.

Picture of me presenting the talk

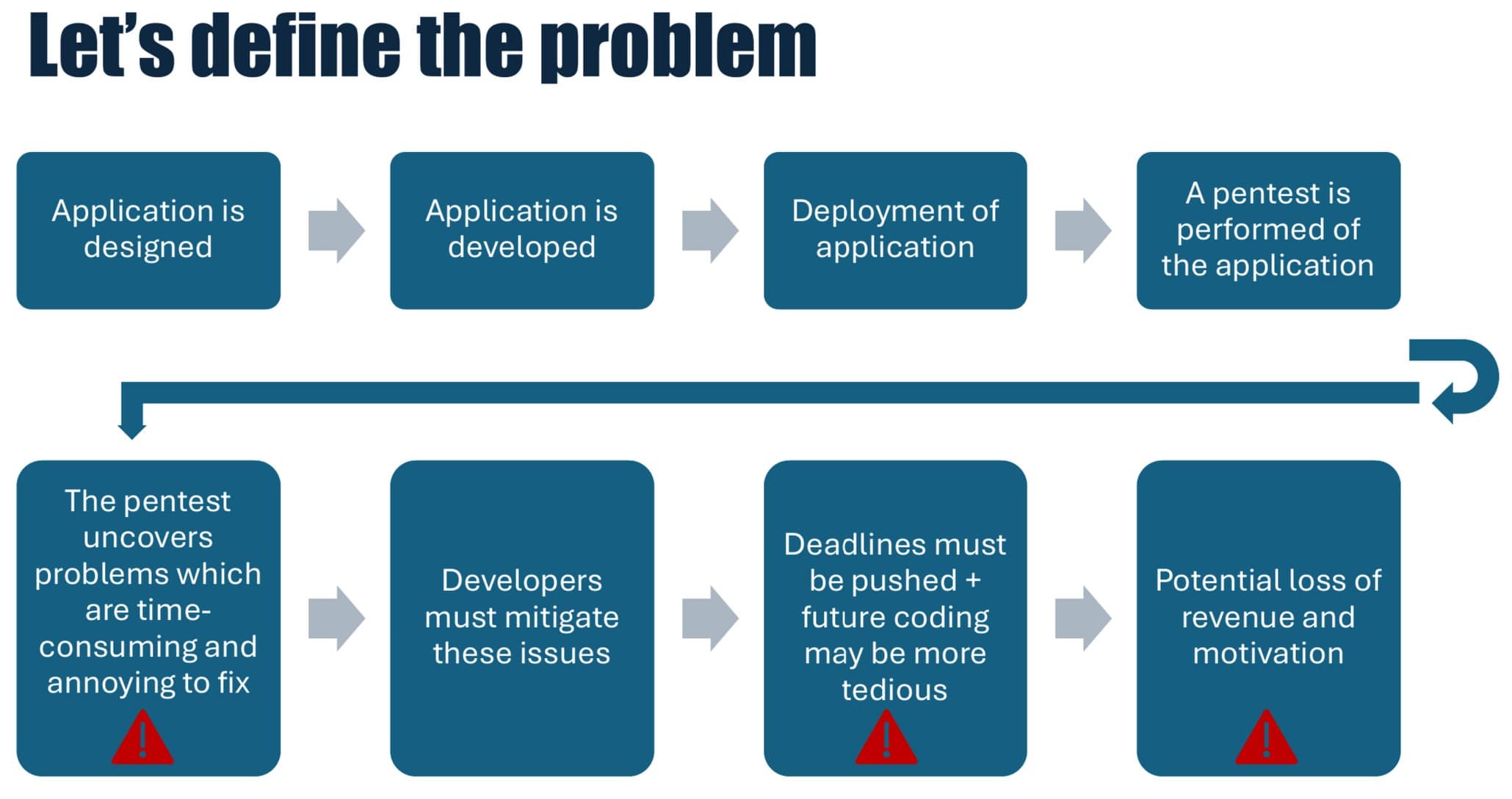

A problem I often observe when working as a web application pentester and DevSecOps consultant is that many development teams treat security reactively instead of proactively ensuring that their application is as secure as reasonably possible. A worst-case, but realistic, example is visualized below. Here the developers spend a lot of time on the initial design and problem definition to ensure that the application fulfills the technical requirements, but forget to consider security requirements. Development then continues over a long period. Once the application is ready, it is deployed and starts being used in a business-critical way with real data and users.

At some point, the development team decides to run a security scan and/or a full application pentest. If security has not been a focus during design and development, these tests may reveal that core areas of the application were not designed securely. An example of such a finding could be an improperly built authentication and authorization system, which is tedious to replace once you are deep into the development of an application. Because this may be a critical issue, the developers have to build a workaround to secure the application, as a full rewrite of insecure functionality is rarely realistic budget-wise. From then on, the developers must keep the workaround in mind during all further development and maintenance. This likely complicates their work and makes developing the application more frustrating.

The talk covered actions you can take to reduce the likelihood of ending up in a situation like the one described above, including a practical approach that works even if your application has been under development for years.

Visualization of the problem which this talk was trying to address.

If you are interested: See what a web application pentest covers at a minimum

Core concepts:

Because the core concepts have many slightly different definitions, let's first establish how the key terms are used in this article and talk.

"Secure by design" means that an application has been designed with security as a core design focus. Common vulnerability areas have been considered during the design and development of the application, and steps have been taken to remove or mitigate these common pitfalls. Furthermore, technologies have been chosen to reduce the chance of accidentally introducing a vulnerability, and additional tools are used to verify, scan, and block potential attacks and issues.

You may also have heard the term "secure by default". In this context, it means that the default configuration of your application is as secure as reasonably possible. It's better to have your users explicitly lower their security level if they want to than to convince them to take action to raise it.
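To make "secure by default" concrete, here is a minimal sketch in Python of a settings object for session cookies whose defaults are the most restrictive options, so that weakening them requires an explicit, visible choice. The class name `SessionCookieSettings`, its fields, and the chosen default values are hypothetical and not taken from any particular framework; the cookie attributes themselves (`Secure`, `HttpOnly`, `SameSite`, `Max-Age`) are standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionCookieSettings:
    """Hypothetical settings object: every default is the most secure option."""
    secure: bool = True          # only send the cookie over HTTPS
    http_only: bool = True       # hide the cookie from client-side JavaScript
    same_site: str = "Strict"    # do not send the cookie on cross-site requests
    max_age_seconds: int = 3600  # short-lived sessions unless configured otherwise

    def __post_init__(self) -> None:
        if self.same_site not in ("Strict", "Lax", "None"):
            raise ValueError(f"invalid SameSite value: {self.same_site!r}")
        # Modern browsers reject SameSite=None cookies that are not also Secure
        if self.same_site == "None" and not self.secure:
            raise ValueError("SameSite=None requires secure=True")

    def to_header_attributes(self) -> str:
        """Render the attributes as they would appear in a Set-Cookie header."""
        parts = [f"Max-Age={self.max_age_seconds}", f"SameSite={self.same_site}"]
        if self.secure:
            parts.append("Secure")
        if self.http_only:
            parts.append("HttpOnly")
        return "; ".join(parts)


# Secure out of the box: callers get the safe configuration without doing anything
default = SessionCookieSettings()
print(default.to_header_attributes())  # Max-Age=3600; SameSite=Strict; Secure; HttpOnly

# Lowering security is still possible, but it has to be written down explicitly
relaxed = SessionCookieSettings(same_site="Lax")
```

The design choice is that the insecure path is the one that costs effort and shows up in code review, rather than the secure one.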

"Shift left" or "shifting security left" are common buzzwords in the industry at the moment. They mean moving responsibility for security earlier in the development lifecycle. In practice, this means implementing "secure by design" as defined above, by taking steps to ensure that security is an active part of the development process.

Security frameworks, or security checklists, are lists of controls and recommendations to follow to help ensure that your application is secure. The one I highly recommend is OWASP ASVS (Application Security Verification Standard), as it's a well-written and thought-out document based on input from many different organizations. It's a well-structured 71-page PDF (v4.0.3) with lots of good recommendations on how to secure your application. The page count may seem overwhelming, but it doesn't need to be: the authors have grouped the controls into categories, which makes the document easy to work with. For example, if you are implementing file upload functionality, it takes only a few seconds to find the relevant section using the table of contents (V12 Files and Resources in v4.0.3). Another useful point is that there is no official "certification stamp" for complying with OWASP ASVS. This makes it a nice starting point on your "secure by design" journey, as you are not forced to comply with every control, including those that may not fit your application's context.

The security framework I recommend: OWASP ASVS

How to implement "secure by design" in new applications:

Now let's address how to implement "secure by design" in practice, starting with a new project.

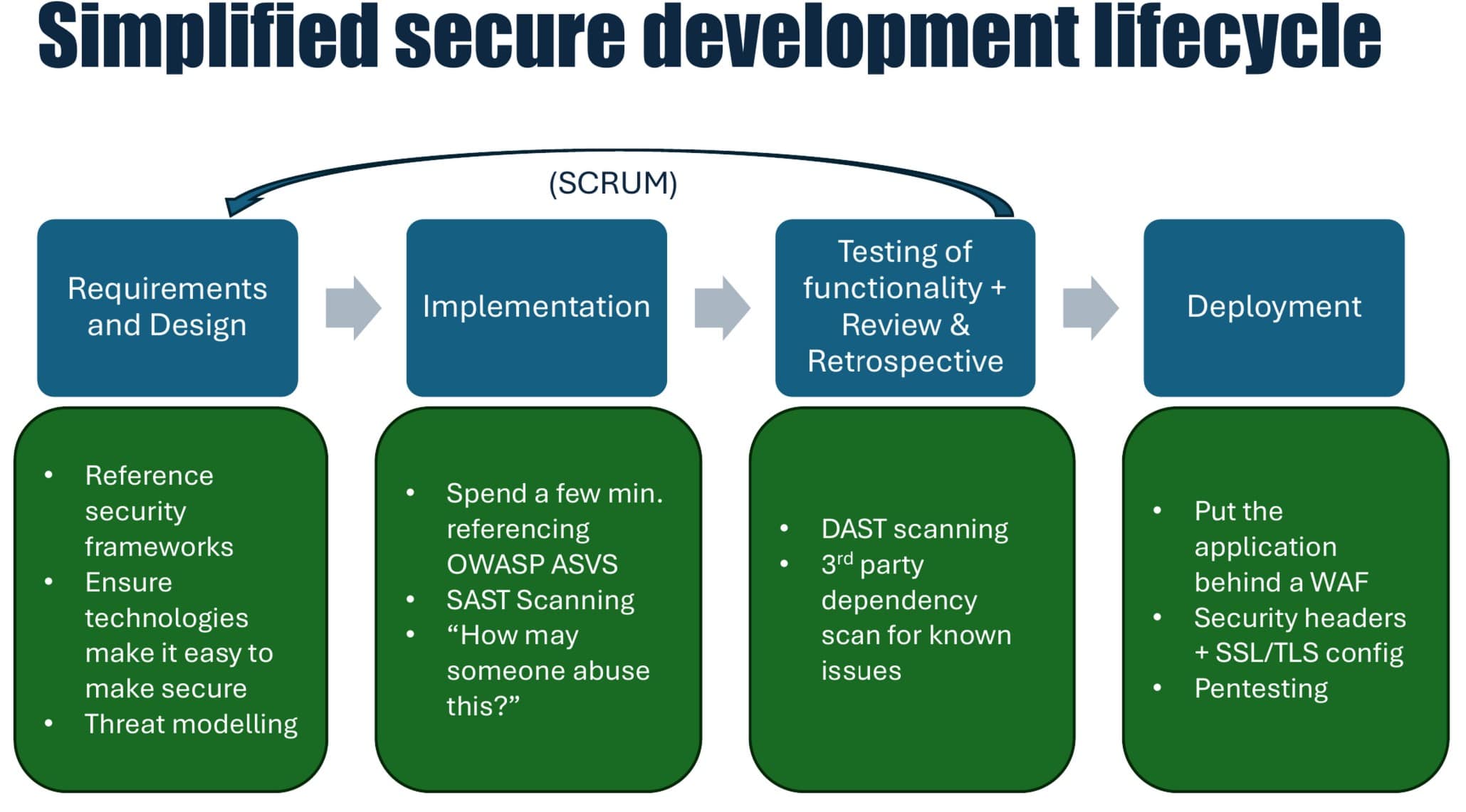

I recommend that the design process include threat modeling and consult security frameworks such as OWASP ASVS to identify where common pitfalls may occur. Based on these, the software can be designed in a way that mitigates or greatly reduces the chance of security issues occurring in the application. Likewise, it's recommended to choose technologies and frameworks that make it difficult for developers to accidentally introduce vulnerabilities. For example, requiring the use of parameterized SQL queries greatly reduces the chance of a SQL injection vulnerability being introduced during development.
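As a minimal sketch of why parameterized queries matter, assuming Python's built-in `sqlite3` module and a hypothetical `users` table, the first function below pastes user input into the SQL text while the second passes it as a bound parameter. A library or ORM that only offers the second style makes the first mistake much harder to make.

```python
import sqlite3

# Hypothetical example data in an in-memory database
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")])


def find_user_unsafe(name: str) -> list:
    # VULNERABLE: user input becomes part of the SQL text and can change the query's structure
    return conn.execute(f"SELECT name, role FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(name: str) -> list:
    # Parameterized: `name` is bound to the ? placeholder as data and never parsed as SQL
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()


payload = "nobody' OR '1'='1"
print(find_user_unsafe(payload))  # both rows: the payload rewrote the WHERE clause
print(find_user_safe(payload))    # []
```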

During development, I suggest that you add a SAST (static application security testing) tool to your CI/CD pipeline. These tools scan the code for "low hanging fruit" security issues and double as a learning opportunity, alerting developers when they may have accidentally written something insecure. Furthermore, I recommend that you teach your developers about common security issues. With this knowledge in place, add a step to each task that prompts them to briefly consider "how might someone abuse this?" and to spend a few minutes reading the relevant sections of OWASP ASVS before designing their solution. I would expect this to make them less likely to accidentally introduce security issues into your application. It only adds a few minutes at the start of each task, which should not be too disruptive to the developers' current workflow.
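To illustrate the kind of "low hanging fruit" a SAST tool looks for, here is a toy rule written with Python's standard `ast` module. It flags `.execute()` calls whose query is built with an f-string, `+`, `%`, or `.format()`. The function name `find_sql_string_building` is hypothetical and this is only a sketch of the idea; established tools such as Semgrep or Bandit ship far more thorough rules and are what you would actually run in CI/CD.

```python
import ast


def find_sql_string_building(source: str, filename: str = "<input>") -> list[str]:
    """Toy SAST rule: flag .execute() calls whose query argument is built dynamically."""
    findings = []
    for node in ast.walk(ast.parse(source, filename)):
        # Only look at calls of the form <something>.execute(<query>, ...)
        if not (isinstance(node, ast.Call)
                and isinstance(node.func, ast.Attribute)
                and node.func.attr == "execute"
                and node.args):
            continue
        query = node.args[0]
        built_dynamically = (
            isinstance(query, ast.JoinedStr)  # f"... {x} ..."
            or (isinstance(query, ast.BinOp)
                and isinstance(query.op, (ast.Add, ast.Mod)))  # "..." + x  or  "..." % x
            or (isinstance(query, ast.Call)
                and isinstance(query.func, ast.Attribute)
                and query.func.attr == "format")  # "...".format(x)
        )
        if built_dynamically:
            findings.append(f"{filename}:{node.lineno}: possible SQL injection, use a parameterized query")
    return findings


sample = '''
cur.execute(f"SELECT * FROM users WHERE name = '{name}'")
cur.execute("SELECT * FROM users WHERE name = ?", (name,))
cur.execute("DELETE FROM t WHERE id = " + user_id)
'''
for finding in find_sql_string_building(sample, "app.py"):
    print(finding)
```

In a pipeline, a non-empty result would typically fail the build, and the message itself serves as the learning moment for the developer.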

Once you have a deployable version of the application, I recommend using a DAST (dynamic application security testing) tool to scan the running application for easy-to-spot vulnerabilities and known CVEs. If you have a business-critical application, it's also recommended to get manual pentests done at regular intervals. A pentester can cover the application in greater depth and with more context than automation can, especially in a white-box pentest, where the pentester has access to the source code for a deeper understanding of the application. During deployment, it's also recommended to put your application behind a WAF (Web Application Firewall), which analyzes the traffic to the application and blocks obviously malicious requests. Having a WAF in front of your application greatly increases the time, effort, and skill required to bypass it and attack your application. It will very likely also give you a lot of useful logs if someone is probing your application for issues.
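The core idea of signature-based WAF filtering can be sketched in a few lines of Python: decode the request, compare it against known attack patterns, then block and log on a match. The signature list and the function names `matched_signatures` and `filter_request` are hypothetical and deliberately naive; real WAFs (for example ModSecurity with the OWASP Core Rule Set, or a managed cloud WAF) use far larger rule sets, input normalization, and anomaly scoring. Treat this as an illustration only, not as protection.

```python
import logging
import re
from urllib.parse import unquote_plus

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("toy_waf")

# Hypothetical, deliberately tiny signature set for illustration only
SIGNATURES = {
    "sql_injection": re.compile(r"'\s*or\s+'?\w*'?\s*=|union\s+select", re.IGNORECASE),
    "xss": re.compile(r"<\s*script\b", re.IGNORECASE),
    "path_traversal": re.compile(r"\.\./"),
}


def matched_signatures(query_string: str) -> list[str]:
    # Decode first so URL-encoded payloads (%27, %3C, ...) are inspected as the app would see them
    decoded = unquote_plus(query_string)
    return [name for name, pattern in SIGNATURES.items() if pattern.search(decoded)]


def filter_request(client_ip: str, query_string: str) -> int:
    """Return an HTTP status: 403 if a signature matched, otherwise 200 (forward to the app)."""
    hits = matched_signatures(query_string)
    if hits:
        # Logging blocked requests is what makes probing attempts visible to you
        log.warning("blocked %s: %s in %r", client_ip, ", ".join(hits), query_string)
        return 403
    return 200


print(filter_request("203.0.113.7", "q=nobody%27+OR+%271%27%3D%271"))  # 403
print(filter_request("203.0.113.7", "q=hello+world"))                  # 200
```

Even this toy shows both effects mentioned above: obvious attacks are rejected before they reach the application, and each rejection leaves a log entry.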

Graphic description of the recommended steps in a simplified secure development lifecycle.

In summary, the recommendations for a new project are:

- Perform threat modeling and reference OWASP ASVS during the design phase, and ensure the design mitigates common pitfalls and attacks.

- Ensure that you choose technologies that make it difficult to accidentally introduce vulnerabilities.

- Reference OWASP ASVS during development, and get into the habit of asking "how might someone attack this?".

- Use automation to scan the code and the application for "low-hanging fruit" issues, and supplement with manual pentesting if it is a business-critical application.

- Use a WAF to protect your application.

Microsoft has outlined good recommendations on implementing: A secure development lifecycle (SDL)

How to implement "secure by design" in long-lived existing applications:

For those of you working on an existing application, the previous recommendations may not be realistic to implement fully within a short timeframe. Instead, I recommend a process that lets you gradually increase the security of your application, so that you eventually end up with a much more secure application while still meeting your current deadlines.

Here I will introduce the concept of a "technical security debt bucket", which you maintain alongside your sprint backlog. The idea is to keep a list of all the actions you can take to increase the security level of your application. Initially, I recommend that you perform threat modeling of the application, scan both the code and the running application, and do a manual pentest of the application. These will likely produce a list of actions that you can use to populate the "technical security debt bucket" initially. In every sprint, pull some tasks from this bucket in alongside your normal sprint backlog.
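In practice the bucket could simply be a label in your existing issue tracker. As a minimal sketch of the idea, assuming hypothetical `SecurityTask` and `SecurityDebtBucket` types, tasks from threat modeling, scans, pentests, and developers go into one prioritized list, and each sprint pulls a fixed number of the most urgent ones in alongside the normal backlog. How many tasks to pull per sprint is an assumption you would agree on with your team, and the task titles below are made up.

```python
from dataclasses import dataclass, field


@dataclass(order=True)
class SecurityTask:
    priority: int                       # 1 = most urgent; the only field used for sorting
    title: str = field(compare=False)
    source: str = field(compare=False)  # e.g. "threat model", "SAST", "pentest", "developer"


@dataclass
class SecurityDebtBucket:
    tasks: list[SecurityTask] = field(default_factory=list)

    def add(self, task: SecurityTask) -> None:
        self.tasks.append(task)

    def pull_for_sprint(self, count: int) -> list[SecurityTask]:
        """Remove and return the `count` most urgent tasks (stable sort keeps ties in insertion order)."""
        self.tasks.sort()
        selected, self.tasks = self.tasks[:count], self.tasks[count:]
        return selected


bucket = SecurityDebtBucket()
bucket.add(SecurityTask(2, "Add rate limiting to login", "threat model"))
bucket.add(SecurityTask(1, "Replace string-built SQL in reports module", "SAST"))
bucket.add(SecurityTask(3, "Tighten session cookie attributes", "pentest"))
bucket.add(SecurityTask(2, "Validate file type on avatar upload", "developer"))

sprint_backlog = ["Feature: export to CSV", "Bug: broken pagination"]  # normal sprint work
security_tasks = bucket.pull_for_sprint(2)
sprint_backlog += [task.title for task in security_tasks]
print(sprint_backlog)
```

Keeping the per-sprint number small and fixed is what lets security work progress steadily without displacing feature deadlines.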

In larger applications, the first step in solving a task is often to (re)familiarize yourself with the relevant area of the application. If you teach your developers about common pitfalls and how to reference OWASP ASVS, you can ask them to spend a few extra minutes at this stage checking whether that part of the application looks secure. If they discover a potential improvement that cannot be fixed quickly, they can add it as a task to the "technical security debt bucket". Of course, the developers will likely not be experts on all security issues in the same way that a skilled web application pentester may be, but they have a unique perspective, as they have deep domain knowledge of the specific implementation of your application.

Combining multiple testing activities with a few minutes of developer review per task may help you uncover potential issues. Having a "technical security debt bucket" for less critical issues also reduces pressure on the development team, because fixing, prioritizing, and tracking these tasks fits into their normal workflow. All teams and organizations are unique, so the specific implementation of a process like this should be something you discuss with your developers.

Also keep in mind that if you ask your developers to spend more time on security, they will likely be slightly less productive on other KPIs. So if you want to increase the likelihood of such a process being adopted, make writing secure code one of the developers' KPIs. Measuring this can be quite difficult, so again this is organization- and team-specific, but it's unrealistic to expect your developers to be 100% as productive as they are now while also spending additional time on security. Hence, make sure it's clear throughout the organization that security is now also a priority, so you don't end up punishing your developers for being security-conscious rather than just finishing tasks as fast as possible with little or no consideration for security.

If you wish to hire me to consult on your "secure by design" journey: I work at ReTest Security

Slides from the talk:

The video of my talk has not been published yet, but I am sharing my slides here in the meantime. Hopefully, they help illustrate some of the points from my talk.


The conference I spoke at: BSides København